The need for quality software never goes away. When it comes to addressing application performance issues, it can be a make or break decision. As systems become more complex, it creates more opportunities where quality can be compromised and performance can degrade over time.

I’ve been involved in the performance engineering industry for many years, and I have noticed patterns in corporate spending around overall quality initiatives. There are different motivations and different levels of motivation. I have found it interesting to see what creates the urgency for performant software and why companies do and do not invest in it.

In this article, we’ll take a look at what leads companies to invest into performance engineering, and why businesses sometimes don’t feel they’ll benefit from performance optimization.

How do businesses make decisions?

Anytime a company spends money for something, there are generally only two reasons:

- They want to get something. This could be great profit margins, grabbing market share, or leverage of some kind. In rare cases, it is because it is simply the right thing to do.

- They are afraid of losing something. Maybe they are trying to prevent the loss of money, customers, or being overtaken by their competitors.

While this is an over-simplification at best, it’s generally why people do anything in my experience.

Why Should We Care About Performance?

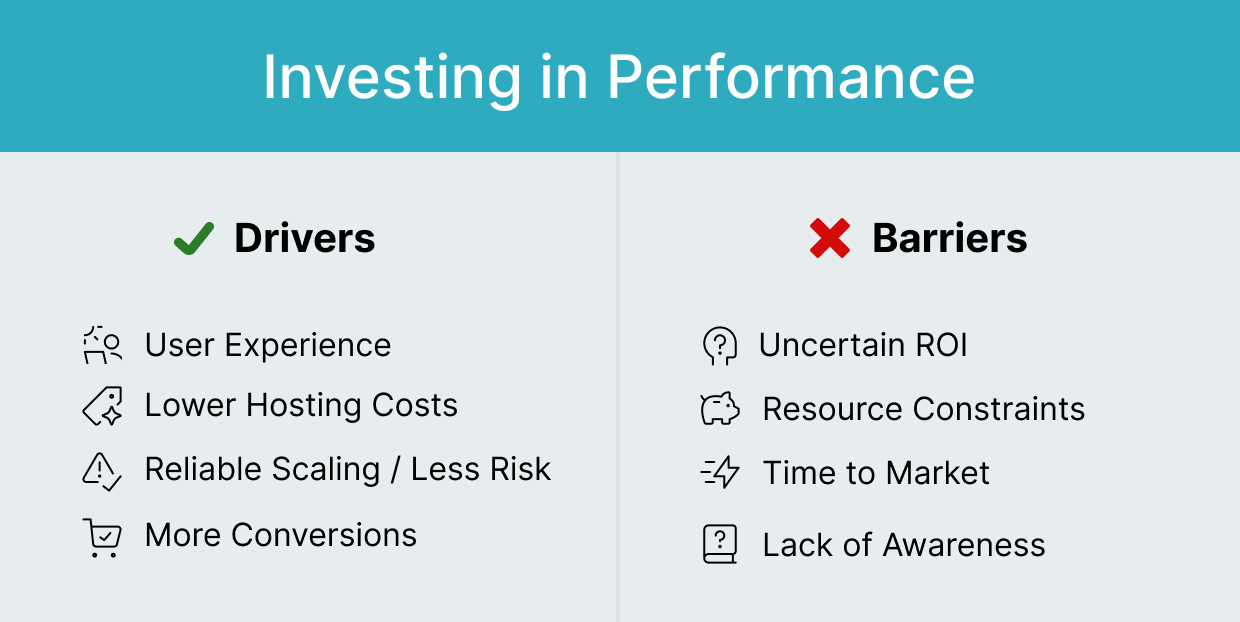

Why would a company invest the time and money to ensure their software is performant? Companies want better business outcomes. It’s not just about technical metrics. Here are some of the most common outcomes:

- Improved User Experience and Customer Satisfaction

- Cost Reduction and Efficiency

- Risk Reduction

- Scalability for Growth

- Competitive Advantage

- Accelerated Time to Market

- Increased Revenue

Let’s take a more detailed look at each of these motivations.

Improved User Experience and Customer Satisfaction

This is often the most direct and impactful benefit. Fast, reliable, and responsive software leads to happy users, which in turn drives customer retention, loyalty, and positive brand reputation.

Some companies believe in this reason more than others. It usually depends on market competition or if they already hold a monopoly (real or perceived).

Cost Reduction and Efficiency

Performance engineering is a strategic way to save money. By optimizing code and system architecture, companies can reduce the amount of infrastructure and computational resources they need to serve their users. This can lead to significant savings on cloud hosting and hardware costs.

We can see by the popularity of the FinOps Foundation that this is very important to companies built on cloud computing technology.

Risk Reduction

Rather than waiting for a system to fail under a heavy load, performance engineering integrates testing and monitoring throughout the development lifecycle.

This "shift-left" approach helps identify and fix bottlenecks early on, when they are much less expensive and time-consuming to resolve. It prevents costly and reputation-damaging outages.

Scalability for Growth

As a business grows, its systems must be able to handle increasing numbers of users and transactions.

Performance engineering ensures that an application is designed to scale effectively without sacrificing performance, allowing the business to grow without hitting technical limitations. It’s about preventing costly outages.

Competitive Advantage

In today's digital landscape, a fast and reliable application is a key differentiator. Companies with superior performance can attract and retain more customers than their slower, less dependable competitors.

Accelerated Time to Market

By catching performance issues early, performance engineering helps teams avoid last-minute delays and extensive re-work. This leads to faster and more predictable deployment cycles.

Increased Revenue

The benefits of a good user experience will directly translate into higher conversion rates and this equates to increased sales.

While these are all great reasons, the unfortunate truth is that companies can decide to make the wrong decision. In fact, companies can decide to go out of business due to poor decisions any time they want. All it takes is poor leadership and time.

Are There Good Reasons To Avoid or Delay Performance?

While the engineer in me would say there is never a time or reason where performance should not be the highest priority, this is because it has been my focus for over 30 years. So I am admittedly biased in how I feel about it. If I set that aside and put myself into the role of developer, executive management, or end user I may end up with another answer.

- Lack of Immediate, Tangible ROI

- Budget and Resource Constraints

- Organizational and Cultural Resistance

- Focus on Speed over Quality

- Lack of Knowledge and Awareness

- The "We'll Fix It Later" Mentality

Lack of Immediate, Tangible ROI

While the long-term benefits are substantial, the short-term return on investment can be difficult to quantify. It's often easier for management to justify spending money on new features that directly generate revenue than on a preventative measure that avoids future costs.

In my opinion, this is one of the biggest reasons companies do not invest in performance as a priority more than anything else. If the business does not see a huge return for making the software efficient, it may be more important to release it earlier to get more market share.

One of the biggest ongoing debates in the engineering world is “premature optimization” and where the line is where things that just be overengineered for no reason. To spend major money optimizing a birthday announcement website that is only for internal employees is probably not the best idea. Prioritizing speed for real-time financial trading on Wall Street - you will always be optimizing that. It’s about risk, revenue, and customer expectations.

Budget and Resource Constraints

Performance engineering requires specialized skills, tools, and dedicated time. Smaller companies, or those with tight budgets, may view this as an expensive luxury rather than a necessity. They may not have the financial resources or skilled personnel to implement these practices.

While this sounds reasonable, this can actually backfire. It’s another case of knowing when to apply specific performance engineering features. The least expensive way to get started is to shift performance left in the development cycle. Finding performance defects and getting immediate feedback when a new bit of code is being developed means fixing it earlier and saving money on the back end.

Open source tools can be used in this phase of development. While it may require more maintenance and setup time, it avoids licensing costs that are generally off limits for small companies. Shifting left is something even small companies can do and get performance gains.

Organizational and Cultural Resistance

In some companies, performance is seen as a reactive activity that happens at the end of the development cycle. It may not be integrated into the company's core culture or prioritized by leadership. It may be seen as disruptive.

If companies do not embrace “shifting left” as we mentioned earlier, a large integrated performance test could be seen as disruptive. However, performance engineering is more than just testing, and can be done without disruption.

Many times, I have helped tune a system by looking at the website in the browser “developer tools” and made recommendations based on my experience. This did not require a long, drawn out testing exercise and the tuning changes had a major positive impact on the application.

This is why front end website monitoring applications are so helpful. They identify variations in performance when the website updates new changes and sends alerts.

Focus on Speed over Quality

In fast-paced, high-pressure environments, the primary focus is often on delivering new features and products as quickly as possible. Performance and quality may be deprioritized in favor of speed to market.

There are cases where specific functionality is important to release, even when it may be additionally slow. It could be because users are demanding it. Perhaps the loss of a client is in the balance if the feature isn’t released. Perhaps pushing the release would create a contractual breach that is way more expensive than the cost of a poor performing feature.

It really goes back to the business and the risk. While unfortunate, there are times where the business has to overrule engineering for the best of the company overall.

Lack of Knowledge and Awareness

Many companies simply do not fully understand the scope and value of performance engineering. They may confuse it with basic performance testing or be unaware of the significant long-term costs associated with poor performance.

In the case of “we don’t know what we don’t know”, generally time and experience will eventually reveal the need for performance by way of user complaints. No one likes a slow application and there are many ways to publicly announce displeasure. In these cases, whoever handles customer success or customer support will usually receive the brunt of performance complaints.

This is an area where I believe some of the blame goes to the IT industry. There needs to be more emphasis on performance engineering concepts like queuing theory taught in schools with technical degrees (i.e. computer science) and at least have a chapter on how to address performance issues in software.

The "We'll Fix It Later" Mentality

This is a common and costly pitfall. Companies may believe that they can address performance issues after a product is launched if and when they arise. They are saying “OK” to technical debt. However, fixing problems in production is far more expensive and disruptive than preventing them in the first place.

Can this work? Actually, yes. Facebook famously held to the mantra “good enough is good enough” and they seemed to have scaled just fine over the years. There is quite a bit of information online around the “tyranny of perfectionism”. Many performance engineers tend to fall into this cult, and fail to account for times where “good enough” can actually work better than trying to release the perfect software candidate.

There is ALWAYS a tradeoff to be made between what is released and what should be released. The business should always be the leader of this choice, but based on what they know the customer wants. The question is whether the end user is going to be happy with “good enough” or will “good enough” create more business problems in the future.

My Experience: Motivations For Performance Improvements

What typically creates the motivation to address and prioritize performance?

For illustration, let’s separate software into a couple of general categories: front-end, customer facing websites and the back end software that it runs on.

The buyer for the front end software optimization is generally motivated to improve search engine optimization (SEO) and improving the return on marketing efforts. Companies that recognize better performing websites will increase conversions (and therefore revenue) will always invest in performance tuning and monitoring of their site. They understand it is a process of small changes and tuning tasks that make a huge difference over time.

Real example: The marketing department may want to put a great looking image on the front page of a website during a seasonal campaign. While the intention was to increase conversions for that sale, they did not realize the image was 50 MB in size! It wasn’t cached, and in a few hours, the site was down due to the number of requests pulling this large file off of the web server.

If the front end engineers had established a performance budget and had rules around file sizes, the outage would not have occurred, saving the company from the outage and revenue losses.

For the backend software, the motivation to spend money for better performance tends to be more about risk. Unfortunately, many performance engineering projects are started because a team has already been bitten by a performance defect and it turns out to be costly. That could be in the form of an outage or inefficient and expensive to run, or reputational driven by the complaints by users. Highly visible unhappy users (think social media posts) can result in losing customers and revenue.

Front-end performance optimization is often driven by marketing efforts. Backend performance gets prioritized to prevent outage.

There are industries where certain performance requirements must be met because it's an issue of regulatory compliance. The Financial, Healthcare, and Government sectors are good examples of this. They have to meet some form of performance service level agreements.

Depending on the circumstances they may decide to go with the lowest common denominator to achieve the compliance checkmark. Their motivation may be much different than commercial businesses in a highly competitive environment.

Where’s the value: why companies don’t see ROI in performance

We need to address the number one reason companies won’t spend money: no tangible ROI. At least some of the blame for this has to be placed on the performance community. In my opinion (based on my experience), we have not done a very good job telling the right story to the executive level. We have not shared the right vision.

For too long, consulting companies offered “senior performance engineers” at the lowest cost who were only familiar with how to use a specific tool and provide data. When this didn’t work out well, the consulting company tried to outsource a true performance engineer with real-world experience. The billing rates were higher, and that’s when the company asks, “If you were sending us senior engineers now who can actually address the problem, what were you sending us before?” Great question.

When the outcome of an engineering effort is only data, this can be perceived as having little value. For example, a team spends days or weeks automating and testing a version of software and reports results with no additional commentary, advice, or guidance. The data is only good until the next build or version is released.

Even when there is performance testing within a continuous integration pipeline, things can be missed if the only output is data without context and someone to make sense of that data. A checkbox is sometimes just a checkbox.

When there is no perceived return on the investment into performance tuning, it creates a culture where it’s OK to accept the risk of deployment. This may be fine, as long as the company is willing to live with the impact should that risk be realized. Eventually it happens, and the culture shifts back. Nothing causes change like a problem. And the cycle continues.

Risks and Long Term Value

My company had been working on several projects for a very large financial institution for months, seeing great progress improving several of their main applications. Because of our reputation, I received a call from the CTO for help with a specific performance issue that had plagued a group for months.

Without running any tests, and using only basic monitoring, I was able to isolate the problem to one specific file that had been left out of version upgrades for years. It was never overwritten and was completely out of date. It was a printer driver file (DLL) that was always locked and considered “in use” and therefore never replaced. As new drivers were required for new printers, the old driver would not work as well, and became extremely slow.

Once this file was replaced, the application began to run extremely fast because the printer driver was no longer a bottleneck. Unfortunately, they had already overspent on hardware by $500,000 trying to fix this before deciding to try performance engineering. They actually needed only 10% of the hardware they had acquired to fix this problem.

That doesn’t mean the entire company had developed a culture of performance. Around the same time, another team inquired about the cost of doing a load testing project on a web application. The new version of the application had already cost about two million dollars to deliver. A standard load test was quoted at $20,000.00 or 1% of the budget. The business team thought this was too high and declined to engage. The business had decided to accept the risk.

The application was released into production. Within 30 days their largest client cancelled their contract, citing slow performance and the top complaint. It cost them $6 million dollars in annual revenue to lose that client. All for $20,000. This is what I mean about accepting the impact, not just the risk.

Instead of the Chief Technical Officer, maybe we should be pitching the value of performance to the role of "Chief Risk Officer", because their mission is all about reducing risks while increasing efficiency.

Common Ways To Avoid Performance Testing

I see two common techniques that companies use to avoid investing into performance optimization:

- Throwing hardware at the problem

- Relying on observability

Throwing hardware at the problem

The most common way that organizations try to avoid performance engineering is throwing hardware at the problem first. The problem with this approach is that poor performant software will consume as much of a resource as it is allowed to have. It may take weeks for a reboot for that slow memory leak, but it’s going to happen eventually.

If this has happened, or if they are bound by regulatory compliance, they may get the cheapest labor with free tools to “check the box” that performance testing was completed. This is also usually a band aid until another performance defect makes it to production.

Relying on observability

Cloud-native and more modern software development teams may decide to use a combination of Observability solutions along with blue/green environments, or use rollbacks with kubernetes so that if a defect is discovered they just go back to the last version and continue to fix it in a staging environment.

More and more I see companies using observability to avoid performance testing unless they absolutely have to. In some cases this can work, but it depends on having a highly skilled set of software engineers at each stage of the software development lifecycle.

How to decide whether and how to invest in performance

If you are in the position right now of addressing performance in and application, and you are unsure in how to move forward - here are my recommendations:

- Look at your business objectives and what users expect from you.

- Do a quick Cost-Benefit Analysis and ROI computation

- Identify The Current System Bottlenecks and Scalability Needs

Look at your business objectives and what users expect from you

What is your main business objective? Improving user retention? Increasing revenue? Enhancing brand reputation? Take a hard look at which ones are important and determine how vital performance is to meeting those goals. Do you have users complaining, and if you make performance improvements will it directly address this pain?

Do a quick Cost-Benefit Analysis and ROI computation

This should be pretty straightforward. Add up the cost of the people, process, and tools required to reach your performance goals and contrast that to the potential benefits. That might be reduced server costs, increased customer lifetime value, or higher scalability before having to buy more infrastructure.

A very important calculation is the cost of downtime or an outage per minute, per hour, and per day. I am surprised at how many companies do not know this. Hint: it is usually VERY expensive.

Calculate that against improved response times and lower latency. That should make it easy to determine if you should invest, and how much. As a rule of thumb - about 10% - 12% of total budget should be set aside for performance engineering related effort. That includes more than just load testing.

Identify The Current System Bottlenecks and Scalability Needs

Find out what are your slowest applications. Is there one in particular, or is it a set of API’s? Are there known issues around high resource utilization or frequent crashes because the system just can’t handle peak load events?

You will want to prioritize investments in areas with the most significant bottlenecks first. Start with like database optimization and load balancing. Fix the biggest troublemakers first and get the lowest hanging fruit before making the smaller tuning tweaks. Start small and avoid over-engineering.

Monitoring is Still Key

Regardless of whether you are testing or tuning, monitoring is always going to be an important part of knowing how well applications are doing. For front end web applications, monitoring page load times, core web vitals, and mixing synthetic data with real user information is a great way to know the pulse of what’s happening.

Observability answers many new questions that APM (application performance monitoring) could not. There is still the need to know what’s happening with the infrastructure, but we also need to know how the application is doing as it aligns with the business goals of the company.

In both areas, continuous monitoring and continuous improvement bring the win. Continuous performance is easier said than done, so there is still a small percentage of companies doing it when compared to the overall number of companies that exist.

As we continue to move toward AI-powered software, it will become an enabler to make the continuous performance process easier and more accessible. We should be seeing more of this happening over the next 18 months.

Get Off The Fence

If your company has not made performance a priority, I can assure you that you are leaving money on the table. If being faster isn’t motivating, keeping more of your own money in your pocket should be. Efficiency brings with it cost savings. What a great side benefit!

What gets monitored gets done, so the first step is to find out what’s currently happening. Get a baseline of performance of your application and then determine what steps you need to take to make it the best it can be.

For websites, DebugBear can help you pinpoint the easiest changes you can make that will have the most impact. It can also help you dig deeper into some of the more challenging aspects of making improvements.

What motivates companies to invest in performance improvement can vary greatly. If for no other reason, think about your customer and what the reason is for the application in the first place. Putting the end user experience first is never a bad thing, and it is a great place to start.

Monitor Page Speed & Core Web Vitals

DebugBear monitoring includes:

- In-depth Page Speed Reports

- Automated Recommendations

- Real User Analytics Data