A few weeks ago, Google launched the Chrome DevTools MCP server. It allows you to integrate AI models with a Chrome browser instance.

In this article we'll explore what the MCP server can do and how you can use it for performance debugging.

What is an MCP Server?

The Model Context Protocol (MCP) allows AI models to communicate with other applications. The AI client sends requests to an MCP server, which processes each request and returns a response to the model.
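Under the hood, MCP messages are JSON-RPC 2.0. The sketch below shows roughly what happens when the model decides to use a tool: the client sends a tools/call request naming the tool and its arguments, and the server replies with text content that the model reads. The url argument for navigate_page and the reply text are assumptions for illustration, not captured traffic.

```typescript
// Minimal sketch of the JSON-RPC 2.0 messages exchanged when a model calls an
// MCP tool. The navigate_page arguments and the reply text are assumptions.

interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

interface TextContent {
  type: "text";
  text: string;
}

interface ToolCallResponse {
  jsonrpc: "2.0";
  id: number;
  result: { content: TextContent[]; isError?: boolean };
}

// Build the request the client sends when the model decides to use a tool.
function buildToolCall(id: number, name: string, args: Record<string, unknown>): ToolCallRequest {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

// Join the text content the server returns; this is what the model reads.
function responseText(response: ToolCallResponse): string {
  return response.result.content.map((c) => c.text).join("\n");
}

const request = buildToolCall(1, "navigate_page", { url: "https://example.com" });
console.log(JSON.stringify(request));

// Hypothetical reply from the server, matching the request id.
const response: ToolCallResponse = {
  jsonrpc: "2.0",
  id: 1,
  result: { content: [{ type: "text", text: "Navigated to https://example.com" }] },
};
console.log(responseText(response));
```

The request id ties each response to its request, which is what lets a client have several tool calls in flight at once.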

MCP is supported by AI tools built on many different models, such as Claude and Gemini. You can also register MCP servers with tools that let you switch between models, for example Visual Studio Code.

What can the Chrome DevTools MCP server do?

The DevTools MCP server provides tools that let an AI model open a browser, load and interact with a web page, and debug issues on the website.

For example, it includes commands like these:

- Navigate to a web page

- Click on a button

- Fill in a form field

- List console messages

- Record a performance trace

How to get started with the DevTools MCP server

To get started with the DevTools MCP server, you first need to install an AI tool on your computer and then set up the MCP server.

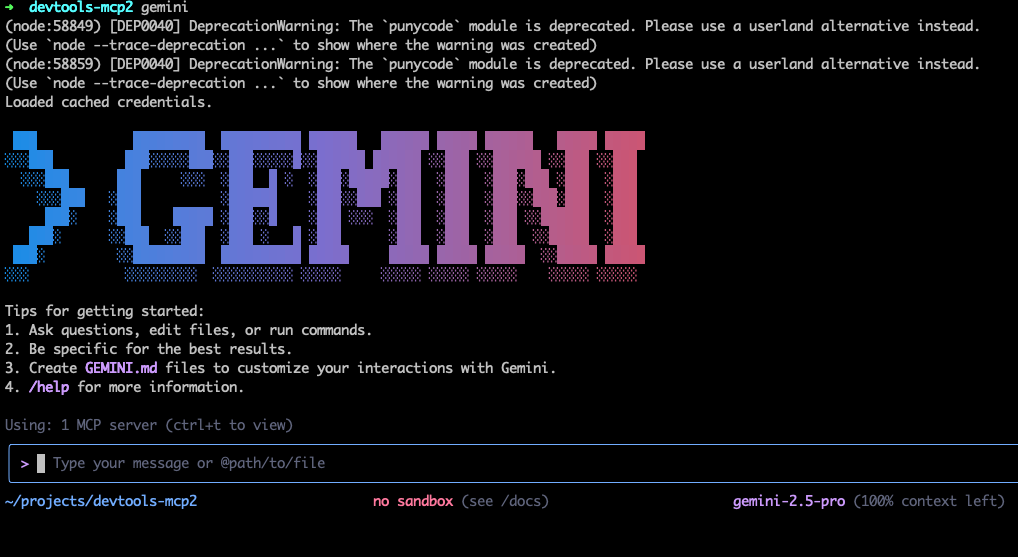

Install the Gemini CLI tool

Before we set up the MCP server, we need an AI tool that can use it. In this case, we'll install Google's Gemini command line interface (CLI).

Open a command terminal on your computer and run the following command:

```shell
npm install -g @google/gemini-cli
```

npm comes with Node.js, which you can download from the official Node.js website if you haven't installed it already.

Installing the Chrome DevTools MCP server

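Next, we add the DevTools MCP server to Gemini's list of MCP servers. Gemini runs the server through npx, which downloads the latest version of the chrome-devtools-mcp package if needed.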

```shell
gemini mcp add chrome-devtools npx chrome-devtools-mcp@latest
```
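The gemini mcp add command saves the server in Gemini CLI's settings so that Gemini can start it on each launch. The entry is expected to sit under an mcpServers key in a settings.json file, holding the command and its arguments; the exact file location and scope depend on your Gemini CLI version, so treat the shape below as an assumption and check the Gemini CLI documentation. The sketch builds that entry from the same command line and prints it as JSON.

```typescript
// Sketch of the settings entry that
// `gemini mcp add chrome-devtools npx chrome-devtools-mcp@latest`
// is expected to create. The shape is an assumption based on Gemini CLI's
// "mcpServers" setting.
interface McpServerConfig {
  command: string;
  args?: string[];
}

interface GeminiSettings {
  mcpServers: Record<string, McpServerConfig>;
}

// Split the CLI arguments that follow the server name into command + args.
function toServerConfig(commandLine: string[]): McpServerConfig {
  const [command, ...args] = commandLine;
  if (!command) throw new Error("Missing command");
  return { command, args };
}

const settings: GeminiSettings = {
  mcpServers: {
    "chrome-devtools": toServerConfig(["npx", "chrome-devtools-mcp@latest"]),
  },
};

console.log(JSON.stringify(settings, null, 2));
```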

Now we're ready to launch Gemini and start chatting.

```shell
gemini
```

During the first launch you'll be asked to log in with your Google account. The CLI then prints a welcome message and provides some tips to get started.

Interacting with the MCP server

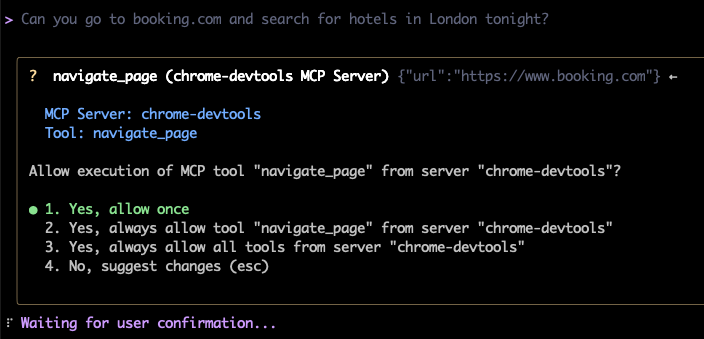

Now that Gemini can use the MCP server to control Chrome, let's try a prompt:

Can you go to booking.com and search for hotels in London tonight?

Gemini realizes it should use the DevTools MCP server to run the navigate_page command. You'll need to confirm it's allowed to do that.

Picking the option Yes, always allow all tools from server "chrome-devtools" means Gemini won't ask us to confirm any further tool calls from this server.

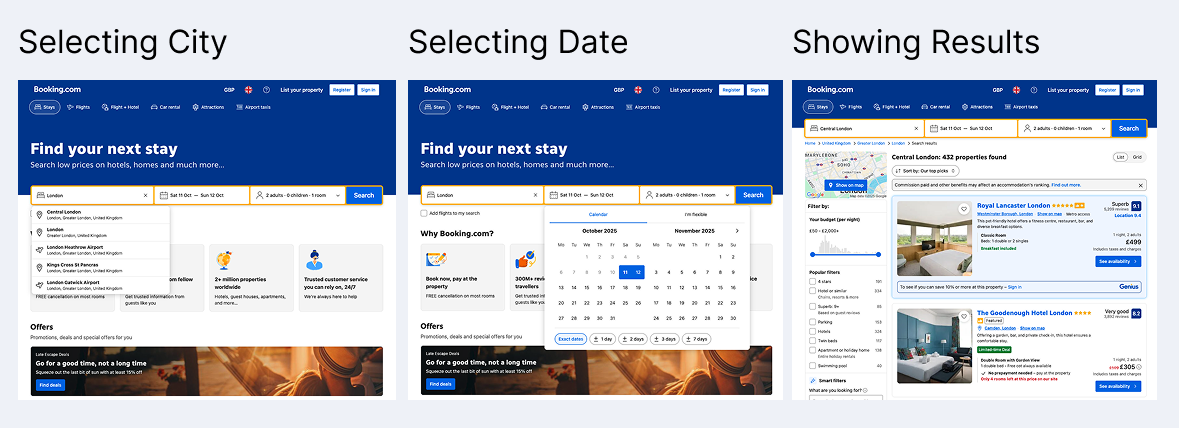

You can watch Gemini open a Chrome window and then run through some additional commands:

- take_snapshot to check the contents of the page

- fill to enter "London" in the search form

- click to select the date and start the search
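The take_snapshot step matters because tools like fill and click appear to identify elements by a uid taken from the latest page snapshot rather than by a CSS selector. Here's a simplified sketch of that flow: find the search box and the search button in a snapshot, then build the fill and click calls for them. The snapshot line format, the uid values and the argument names (uid, value) are all assumptions for illustration, and the date picker step is left out.

```typescript
// Simplified sketch: locate elements in a page snapshot, then build the
// fill and click tool calls that use them. Snapshot format, uids and
// argument names are assumptions for illustration.

interface ToolCall {
  name: string;
  arguments: Record<string, unknown>;
}

// Hypothetical snapshot text: one element per line as: uid=<id> <role> "<name>"
const snapshot = [
  'uid=1_0 RootWebArea "Booking.com"',
  'uid=1_12 combobox "Where are you going?"',
  'uid=1_40 button "Search"',
].join("\n");

// Return the uid of the first element whose accessible name contains `label`.
function findUid(snapshotText: string, label: string): string | undefined {
  for (const line of snapshotText.split("\n")) {
    const match = /^uid=(\S+) \S+ "(.*)"$/.exec(line.trim());
    if (match && match[2].includes(label)) {
      return match[1];
    }
  }
  return undefined;
}

// Build the tool calls for typing a destination and starting the search.
function planSearch(snapshotText: string, destination: string): ToolCall[] {
  const input = findUid(snapshotText, "Where are you going");
  const button = findUid(snapshotText, "Search");
  if (input === undefined || button === undefined) {
    throw new Error("Expected elements not found in snapshot");
  }
  return [
    { name: "fill", arguments: { uid: input, value: destination } },
    { name: "click", arguments: { uid: button } },
  ];
}

console.log(JSON.stringify(planSearch(snapshot, "London"), null, 2));
```

In the real session, Gemini reads the snapshot text itself and picks the elements; the sketch just makes that lookup explicit.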

When it's done we get a summary of the search result:

I have searched for hotels in London for tonight. Here are the top 3 results:

- Royal Lancaster London: 5-star hotel in Westminster Borough, with a "Superb" rating of 9.1 from 5,209 reviews. The price for tonight is £499.

- The Goodenough Hotel London: 4-star hotel in Camden, with a "Very good" rating of 8.2 from 3,892 reviews. The price for tonight is £305.

- Royal Garden Hotel: 5-star hotel in Kensington and Chelsea, with a "Fabulous" rating of 8.6 from 3,245 reviews. The price for tonight is £309.

Now that we've confirmed that the MCP server is set up correctly, let's start investigating some web performance issues!

How to collect performance data

Now let's try to analyze the speed of a website.

Can you check the performance of substack.com?

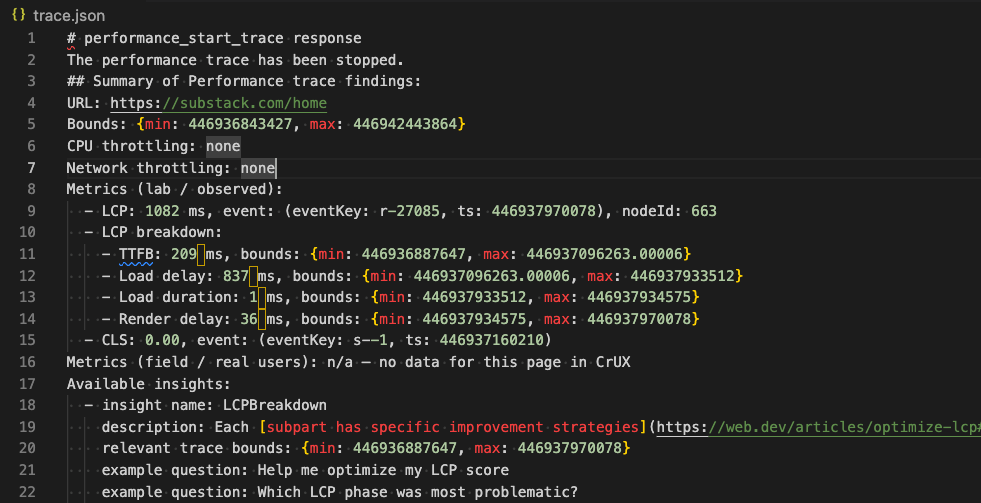

Gemini starts recording a performance trace for the Substack homepage and then provides a summary.

To report on performance, it uses Largest Contentful Paint to assess page load time and Cumulative Layout Shift to assess visual stability.

The performance of substack.com is quite good.

- Largest Contentful Paint (LCP): 1082 ms (good)

- Cumulative Layout Shift (CLS): 0.00 (good)

The LCP is the most significant metric here, and at 1082ms, it's well within the "good" range. The CLS score of 0.00 is excellent and means there are no unexpected layout shifts on the page.
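Gemini's "good" labels line up with the published Core Web Vitals thresholds: LCP is good up to 2.5 seconds and poor above 4 seconds, while CLS is good up to 0.1 and poor above 0.25. A small classifier makes that mapping explicit; the function names are just for illustration.

```typescript
type Rating = "good" | "needs improvement" | "poor";

// Generic Core Web Vitals rating: at or below `good` is good,
// above `poor` is poor, anything in between needs improvement.
function rate(value: number, good: number, poor: number): Rating {
  if (value <= good) return "good";
  if (value <= poor) return "needs improvement";
  return "poor";
}

// LCP thresholds in milliseconds (2.5 s / 4 s); CLS is unitless (0.1 / 0.25).
const rateLcp = (ms: number): Rating => rate(ms, 2500, 4000);
const rateCls = (score: number): Rating => rate(score, 0.1, 0.25);

console.log(rateLcp(1082)); // good
console.log(rateCls(0)); // good
```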

Gemini then reports the LCP subpart breakdown, which in this case shows a high Load Delay component:

The LCP time is broken down as follows:

- Time to First Byte (TTFB): 209ms

- Load delay: 837ms

- Load duration: 1ms

- Render delay: 36ms

The majority of the LCP time is spent in "Load delay", which is the time between when the browser receives the first byte of the [HTML] response and when it starts to render the page.
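The four subparts run one after another, so they add up to the LCP time: 209 + 837 + 1 + 36 = 1083 ms, within a millisecond of the reported 1082 ms (presumably rounding). Strictly speaking, load delay is the time between TTFB and the moment the browser starts downloading the LCP resource, here the image, rather than the start of rendering. The sketch below totals the subparts and finds the dominant one; the interface and function names are hypothetical.

```typescript
// LCP subparts in milliseconds, as reported for the Substack homepage.
interface LcpSubparts {
  ttfb: number;
  loadDelay: number;
  loadDuration: number;
  renderDelay: number;
}

// The subparts are sequential, so they add up to the LCP time.
function lcpTotal(p: LcpSubparts): number {
  return p.ttfb + p.loadDelay + p.loadDuration + p.renderDelay;
}

// Percentage of the total LCP time spent in each subpart.
function subpartShares(p: LcpSubparts): LcpSubparts {
  const total = lcpTotal(p);
  return {
    ttfb: (100 * p.ttfb) / total,
    loadDelay: (100 * p.loadDelay) / total,
    loadDuration: (100 * p.loadDuration) / total,
    renderDelay: (100 * p.renderDelay) / total,
  };
}

// Name of the subpart that takes the most time.
function largestSubpart(p: LcpSubparts): keyof LcpSubparts {
  const keys = Object.keys(p) as (keyof LcpSubparts)[];
  return keys.reduce((a, b) => (p[b] > p[a] ? b : a));
}

const substack: LcpSubparts = { ttfb: 209, loadDelay: 837, loadDuration: 1, renderDelay: 36 };

console.log(lcpTotal(substack)); // 1083
console.log(largestSubpart(substack)); // loadDelay
console.log(subpartShares(substack).loadDelay.toFixed(1)); // 77.3
```

Roughly three quarters of the LCP time is spent before the image request even starts, which is why the discovery of the image is the thing to fix.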

Gemini then suggests other insights it can investigate:

I can also provide more detailed insights into the following areas if you are interested:

- LCP Breakdown

- LCP Discovery

- CLS Culprits

- Render-Blocking Resources

- Network Dependency Tree

- Third-Party Resources

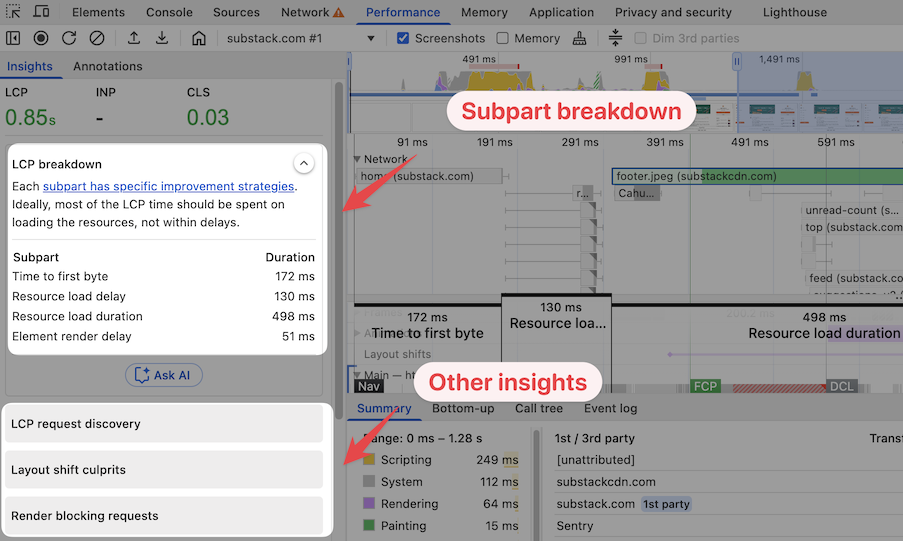

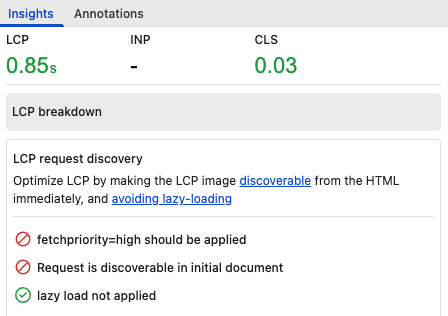

The data we get is very similar to what the DevTools UI provides:

In this case our load time is quite good. That's because we've run the test on a fast unthrottled connection. Enabling DevTools throttling lets you measure a more realistic mobile experience.

What performance data does Gemini receive?

Gemini uses the performance_start_trace command to measure performance. Is this the same as a normal performance trace?

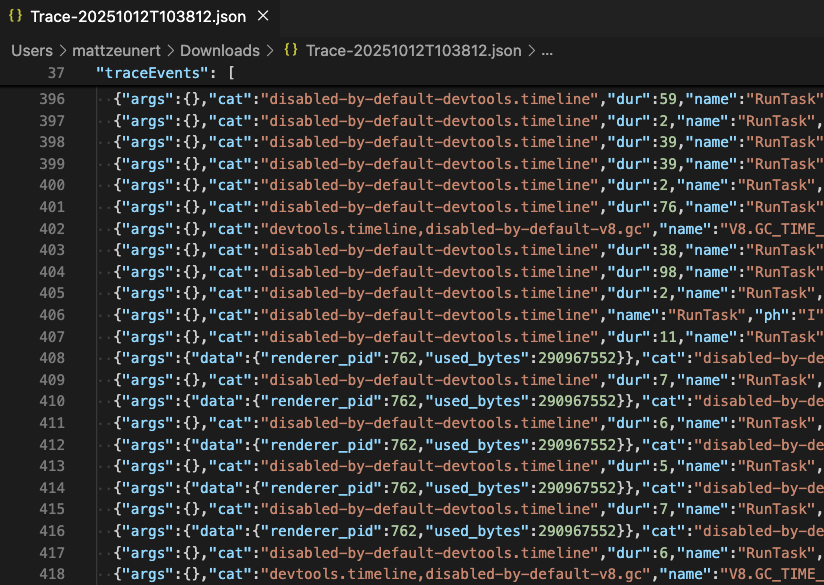

A normal trace JSON file saved from the Chrome DevTools UI is large and full of information. The trace for the Substack homepage, shown above, is a 29.8 megabyte file.

In contrast, when I asked Gemini to save the performance data it received, the result was under 4 kilobytes: 48 lines of text in total.

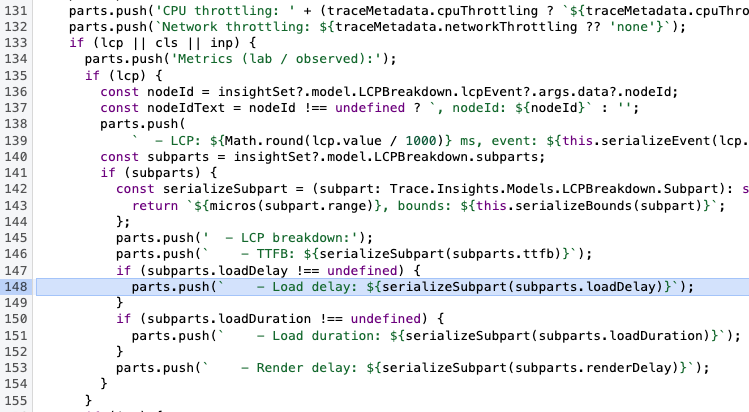

The performance_start_trace command in the DevTools MCP server contains logic to generate a summary of the trace for the AI to consume.

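```typescript
// Excerpt from the DevTools MCP server's tracing code (not standalone).
if (traceResultIsSuccess(result)) {
  // Store the full trace recording on the server side.
  context.storeTraceRecording(result);
  // Send the model a compact text summary instead of the raw trace.
  const traceSummaryText = getTraceSummary(result);
  response.appendResponseLine(traceSummaryText);
}
```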

The summary text itself ultimately comes from the PerformanceTraceFormatter.ts file in the Chrome DevTools source code.
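To get a feel for how a multi-megabyte trace can be condensed into a few lines, here is a heavily simplified summarizer. It is not the PerformanceTraceFormatter logic: it only reads three kinds of trace events, with names (navigationStart, largestContentfulPaint::Candidate, LayoutShift) assumed from Chrome's trace format, and it adds up layout shift scores instead of using the session windows that real CLS is based on. The sample events are invented.

```typescript
// Heavily simplified trace summarizer (not Chrome's PerformanceTraceFormatter).
// Trace event timestamps (ts) are in microseconds.
interface TraceEvent {
  name: string;
  ts: number;
  args?: { data?: { score?: number; had_recent_input?: boolean } };
}

function summarizeTrace(events: TraceEvent[]): string {
  const nav = events.find((e) => e.name === "navigationStart");
  if (!nav) return "No navigation found in trace.";

  const lines: string[] = [];

  // Treat the latest LCP candidate as the final Largest Contentful Paint.
  const candidates = events.filter((e) => e.name === "largestContentfulPaint::Candidate");
  if (candidates.length > 0) {
    const last = candidates.reduce((a, b) => (b.ts > a.ts ? b : a));
    lines.push(`LCP: ${Math.round((last.ts - nav.ts) / 1000)} ms`);
  }

  // Simplification: add up every shift not caused by recent user input,
  // instead of using the session windows that real CLS is based on.
  const cls = events
    .filter((e) => e.name === "LayoutShift" && !e.args?.data?.had_recent_input)
    .reduce((sum, e) => sum + (e.args?.data?.score ?? 0), 0);
  lines.push(`CLS: ${cls.toFixed(2)}`);

  return lines.join("\n");
}

// Invented sample events; a real trace contains many thousands.
const sampleEvents: TraceEvent[] = [
  { name: "navigationStart", ts: 1_000_000 },
  { name: "largestContentfulPaint::Candidate", ts: 1_500_000 },
  { name: "largestContentfulPaint::Candidate", ts: 2_082_000 },
];

console.log(summarizeTrace(sampleEvents));
// LCP: 1082 ms
// CLS: 0.00
```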

Getting concrete performance recommendations

I asked Gemini, "How can I fix the high load delay?". It gave me a long generic response, so I reminded it that I'm looking for an answer in the context of the Substack website.

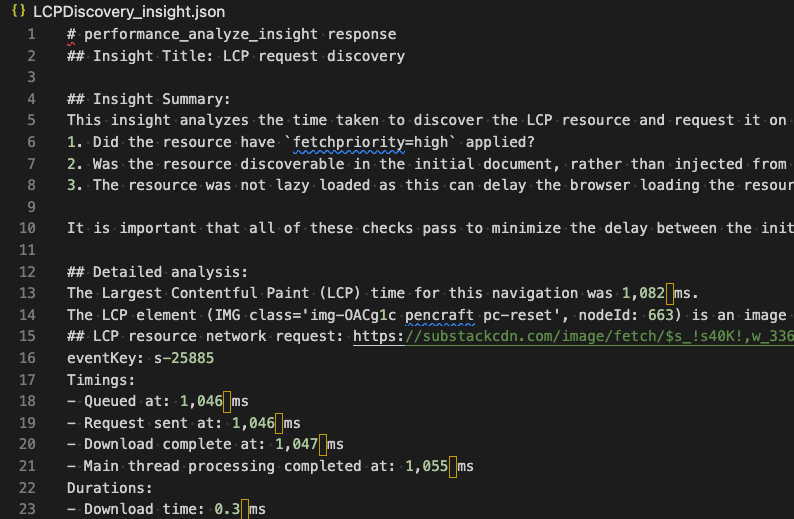

It then ran the performance_analyze_insight command for the LCPDiscovery insight and provided this recommendation:

To fix this, you should:

- Ensure the <img> tag for the LCP image is present in the initial HTML. Do not load it with JavaScript.

- Remove the loading="lazy" attribute from the LCP image.

- Add the fetchpriority="high" attribute to the LCP image.

Here is an example of how the <img> tag should look:

```html
<img src="https://substackcdn.com/image/db48-4b96-b02f-0980d6dc10c2_1080x1350.jpeg" fetchpriority="high" alt="...">
```

In this case, JavaScript is used to insert the <img> tag on the page, so the image can't load until after that code has been downloaded and run.

If we compare the MCP server's analysis with the LCP request discovery audit in DevTools, we can see that the two are very similar.

This is the summary that the MCP server sent to Gemini:

A bit further down, the response also lists the results of the insight's checks:

The result of the checks for this insight are:

- fetchpriority=high should be applied: FAILED

- lazy load not applied: FAILED

- Request is discoverable in initial document: FAILED
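Those three checks are simple yes/no tests on the LCP image request. The sketch below reproduces them for a hypothetical description of the request; the LcpImageRequest shape is invented here, and the PASSED label is an assumption since only FAILED results appear above. With Substack's setup (image inserted by JavaScript, lazy-loaded, no fetchpriority) every check fails, while the recommended <img> tag passes all three.

```typescript
// Hypothetical description of the LCP image request (shape invented here).
interface LcpImageRequest {
  inInitialHtml: boolean; // is the <img> tag in the HTML response itself?
  fetchPriority?: "high" | "low" | "auto";
  loading?: "lazy" | "eager";
}

interface CheckResult {
  label: string;
  passed: boolean;
}

// The three LCP discovery checks listed in the MCP server's response.
function lcpDiscoveryChecks(req: LcpImageRequest): CheckResult[] {
  return [
    { label: "fetchpriority=high should be applied", passed: req.fetchPriority === "high" },
    { label: "lazy load not applied", passed: req.loading !== "lazy" },
    { label: "Request is discoverable in initial document", passed: req.inInitialHtml },
  ];
}

function formatChecks(results: CheckResult[]): string {
  return results.map((r) => `- ${r.label}: ${r.passed ? "PASSED" : "FAILED"}`).join("\n");
}

// Substack: image inserted by JavaScript, lazy-loaded, no fetchpriority.
const substackImage: LcpImageRequest = { inInitialHtml: false, loading: "lazy" };
// Recommended fix: <img> in the initial HTML with fetchpriority="high".
const fixedImage: LcpImageRequest = { inInitialHtml: true, fetchPriority: "high" };

console.log(formatChecks(lcpDiscoveryChecks(substackImage)));
console.log(formatChecks(lcpDiscoveryChecks(fixedImage)));
```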

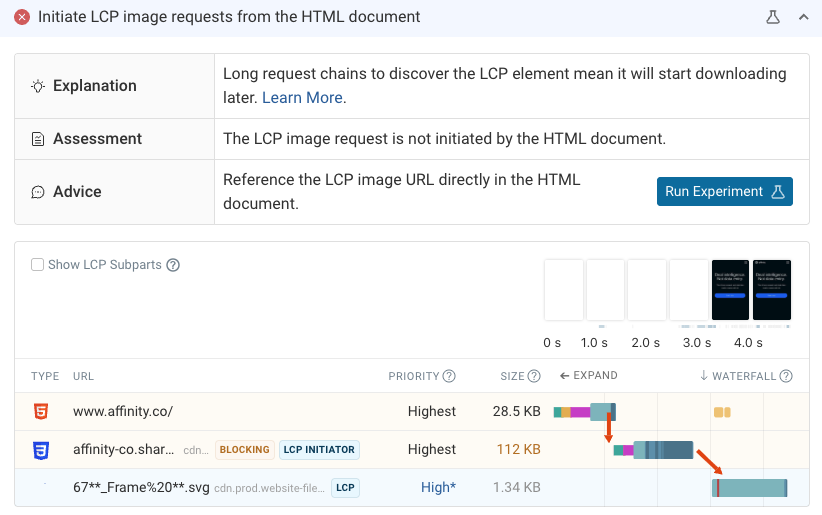

What if the Largest Contentful Paint element is a background image? Gemini and the DevTools MCP server identify the issue:

To fix this and improve the LCP time, I recommend the following:

- Preload the LCP image: Add a <link rel="preload"> tag to the page's HTML <head>. This will tell the browser to start loading the image much earlier.

- Set a high fetch priority: Add fetchpriority="high" to the preload link to ensure the browser prioritizes loading this image over other, less critical resources.

And, after another prompt, it provides the code that it recommends we add to the HTML document.

```html
<link
  rel="preload"
  href="https://cdn.prod.website-files.com/6372644369a530caa8c39dfc/6758b47f65870c8b47a9ce0f_Frame%20625857.svg"
  as="image"
  fetchpriority="high"
/>
```
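The fix itself is a single tag in the <head>. As a sketch of applying it to an HTML string, the hypothetical helper below inserts a preload link right after the opening <head> tag and escapes the URL for use in an attribute. It is only an illustration: a real build step would more likely use an HTML parser than a regular expression.

```typescript
// Escape a value for use inside a double-quoted HTML attribute.
function escapeAttr(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;");
}

// Build a high-priority image preload tag, as recommended for background LCP images.
function preloadImageTag(href: string): string {
  return `<link rel="preload" href="${escapeAttr(href)}" as="image" fetchpriority="high" />`;
}

// Insert the preload tag right after the opening <head> tag (hypothetical helper).
function addPreloadToHead(html: string, href: string): string {
  // Match <head> or <head ...>, but not <header>.
  const head = /<head(\s[^>]*)?>/i.exec(html);
  if (!head) throw new Error("No <head> element found");
  const insertAt = head.index + head[0].length;
  return html.slice(0, insertAt) + "\n" + preloadImageTag(href) + "\n" + html.slice(insertAt);
}

const page = "<html><head><title>Demo</title></head><body></body></html>";
console.log(addPreloadToHead(page, "https://example.com/hero.svg"));
```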

Unfortunately, the DevTools MCP server doesn't support local overrides yet, so we can't easily try out the change.

The experiments feature in DebugBear makes it easy to measure the performance impact of specific HTML changes.

For example, our "Initiate LCP image requests from the HTML document" recommendation includes an automatic experiment.

Fixing issues for local websites

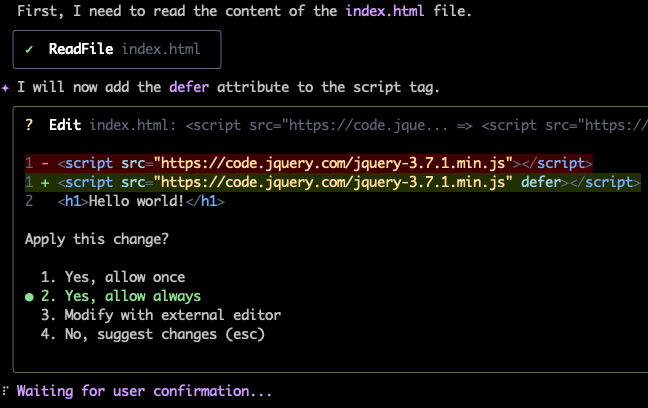

If we have a locally hosted website, we can get the model to make changes and then run the test again.

Let's start with this code:

```html
<script src="https://code.jquery.com/jquery-3.7.1.min.js"></script>
<h1>Hello world!</h1>
```

With Slow 4G throttling enabled, we get an initial page load time of 1.4 seconds.

What change would you make to fix the page? You can edit the file locally.

Gemini correctly came back with a recommendation to defer the jQuery script so that it doesn't block rendering.
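Deferring means adding the defer attribute, so the browser downloads jQuery in parallel and runs it after the document has been parsed instead of blocking rendering. Gemini's actual edit isn't shown here; the sketch below is a naive, regex-based version of the same change that leaves inline scripts and scripts that are already async, deferred or modules untouched. It's an illustration, not a robust HTML rewriter.

```typescript
// Naive sketch: add `defer` to external classic scripts so they no longer
// block rendering. Regex-based, so it's only suitable for simple pages.
function deferExternalScripts(html: string): string {
  return html.replace(/<script\b([^>]*)>/gi, (tag: string, attrs: string) => {
    const hasSrc = /\bsrc\s*=/i.test(attrs);
    // async, defer and module scripts are already fetched without blocking the parser.
    const nonBlocking = /\b(async|defer)\b/i.test(attrs) || /\btype\s*=\s*["']?module\b/i.test(attrs);
    if (!hasSrc || nonBlocking) return tag; // inline or already non-blocking
    return `<script${attrs} defer>`;
  });
}

const original = '<script src="https://code.jquery.com/jquery-3.7.1.min.js"></script>\n<h1>Hello world!</h1>';
console.log(deferExternalScripts(original));
// <script src="https://code.jquery.com/jquery-3.7.1.min.js" defer></script>
// <h1>Hello world!</h1>
```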

You'll need to approve the edit before Gemini changes any files.

And we got a positive result after testing again!

The new Largest Contentful Paint (LCP) is now 628ms, down from 1383ms. This is a 55% improvement.

The "RenderBlocking" insight is no longer present, which confirms that deferring the script was the correct solution.
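The 55% figure checks out: LCP dropped by 1383 - 628 = 755 ms, and 755 / 1383 is about 0.546. A small helper makes the calculation explicit; the function name is just for illustration.

```typescript
// Relative improvement of a metric where lower is better, in percent.
function percentImprovement(before: number, after: number): number {
  if (before <= 0) throw new Error("before must be positive");
  return ((before - after) / before) * 100;
}

const lcpBefore = 1383; // ms, with the render-blocking jQuery script
const lcpAfter = 628; // ms, after deferring the script

const improvement = percentImprovement(lcpBefore, lcpAfter);
console.log(`${improvement.toFixed(1)}% lower LCP`); // 54.6% lower LCP
console.log(`${(lcpBefore / lcpAfter).toFixed(1)}x faster`); // 2.2x faster
```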

Conclusion

The Chrome DevTools MCP server can identify a number of common page speed issues, based on the performance insights that exist in DevTools and Lighthouse today.

Having this data easily accessible from within a chat conversation can make it easier to quickly try out fixes for local websites.
